What's stopping AI regulation?

Five key challenges for policymakers

Artificial intelligence (AI) influences many aspects of our lives.

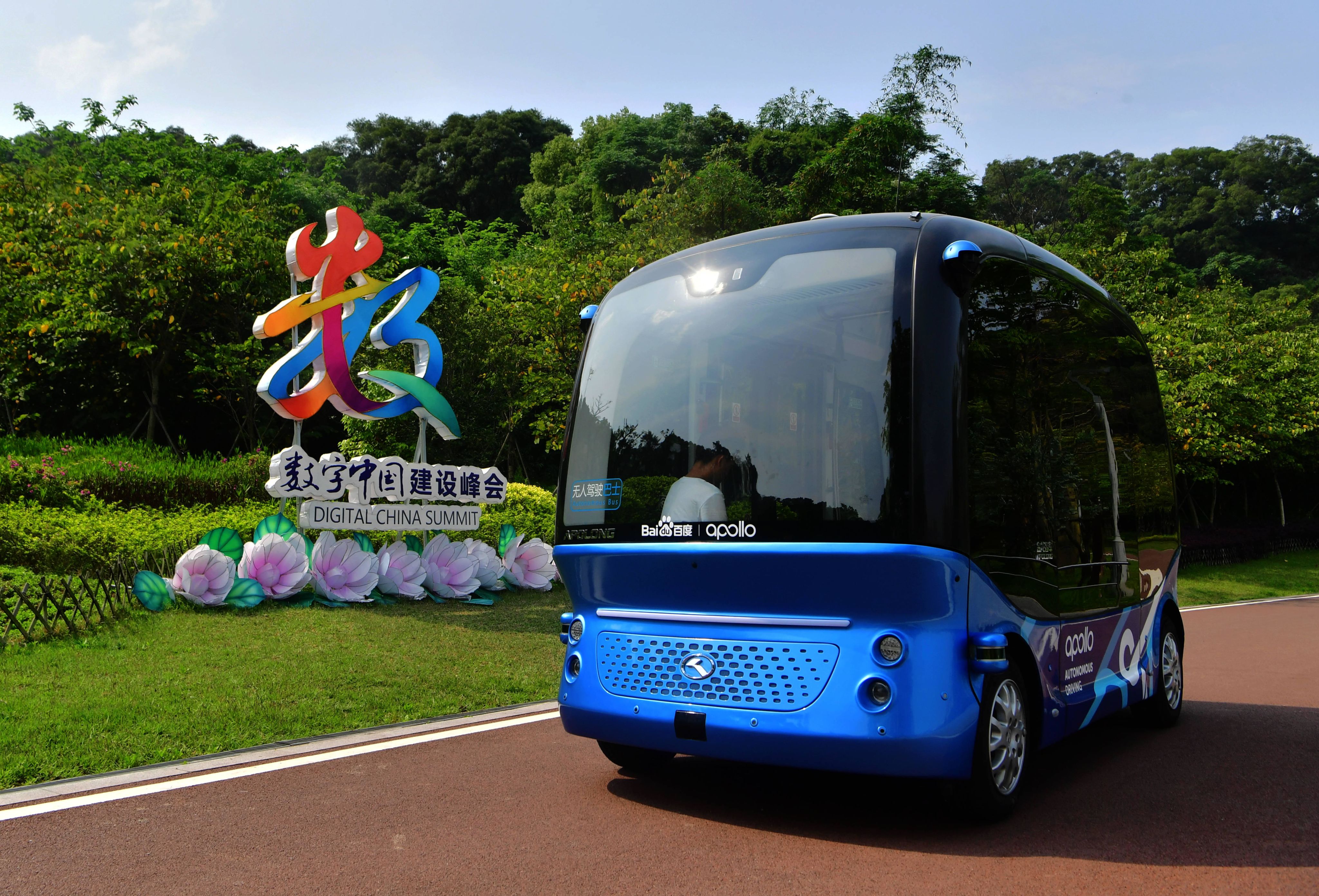

From powering search engines and analyzing medical images to predicting earthquakes and controlling autonomous vehicles, we increasingly rely on AI to carry out tasks that are too complex – or not cost-effective – for humans to do.

AI isn't new – scientists first developed the fundamental ideas behind it in the 1950s, defining it as "the science and engineering of making intelligent machines."

Yet it's only recently that these ideas have been put into practice at scale. A major reason is that branches of AI such as "machine learning" (the science of getting computers to act without being explicitly programmed) need a lot of computing power and a lot of data.

The modern world provides both.

But there are risks. AI applications can:

- Carry out many tasks traditionally done by humans, leading to rapid changes in the workplace and labor market

- Generate and distribute disinformation through fabricated news stories, documents, and "deep fake" images and videos

- Develop biases that harm specific groups and amplify inequalities

- Harm the planet by using enormous amounts of electricity, which may not always come from renewable sources

Some experts even warn of a future where we might find ourselves in a world controlled by AI.

AI has so far mostly been a self-regulated industry.

Now, though, governments and policymakers are working on AI regulations that aim to minimize the risks of AI while realizing its benefits.

This article highlights five key challenges for policymakers looking to regulate AI effectively:

- Defining AI

- Cross-border consensus

- Liability and responsibility

- The "pacing problem"

- Enabling innovation

We also highlight four guiding principles for AI regulation.

1. Defining AI

Many tools and applications fall under the umbrella term "AI."

They include generative AI technologies, which create new content such as text, images, and code, as well as traditional (or conventional) AI, which typically automates tasks and detects patterns.

Some applications of AI – like configuring an email spam filter – are low risk. Others – like using AI programs to make decisions about justice, immigration, or military action – are high risk.

All this makes agreeing on a universal definition of AI a major challenge for regulators.

As the introduction to a special issue on the governance of AI in the Journal of European Public Policy notes, "Many policy instruments employ only partial or preliminary definitions – or refrain from providing a definition at all."

There are still as many definitions of AI as there are people talking about it

The authors of a book chapter on the governance of AI agree: "Policy documents typically use AI as an umbrella term that includes machine learning, algorithms, autonomous systems, and other related terms."

Technological change

The rapid pace of technological change also makes it difficult to decide on a definition that doesn't date quickly.

"This is referred to as the so-called 'AI effect,'" says Nathalie A. Smuha in "From a 'race to AI' to a 'race to AI regulation': regulatory competition for artificial intelligence."

"Technologies that were initially deemed 'intelligent' but over time became normalized by habitual use and exposure, lose their ‘intelligent’ status."

Existing definitions of AI

The differences between existing definitions show how hard it is for regulators to agree on a universal one.

Intelligent behavior

The European Commission defines AI as follows:

"Systems that display intelligent behavior by analyzing their environment and taking actions – with some degree of autonomy – to achieve specific goals.

"AI-based systems can be purely software-based, acting in the virtual world (e.g., voice assistants, image analysis software, search engines, speech, and face recognition systems) or AI can be embedded in hardware devices (e.g., advanced robots, autonomous cars, drones or Internet of Things applications)."

Tasks requiring intelligence

The U.K. Government says: "AI can be defined as the use of digital technology to create systems capable of performing tasks commonly thought to require intelligence."

Machine-based decisions

The U.S. Artificial Intelligence Act, which aims to accelerate AI research and application, defines AI as "a machine-based system that can, for a given set of human-defined objectives, make predictions, recommendations or decisions influencing real or virtual environments."

Comparable with human intellect

Russia's 2019 national strategy for artificial intelligence defines AI as:

"A collection of technological solutions that allow one to simulate human cognitive processes (including self-learning and the search for solutions without using a previously supplied algorithm) and to get results, when accomplishing concrete tasks, that are at least comparable with those of human intellect."

Capacity for learning

In "Governing AI – attempting to herd cats?," Tim Büthe et al. define AI as:

"Systems that combine means of gathering inputs from the external environment with technologies for processing these inputs and relating them algorithmically to previously stored data, allowing such systems to carry out a wide variety of tasks with some degree of autonomy, i.e., without simultaneous, ongoing human intervention.

"Their capacity for learning allows AI systems to solve problems and thus support, emulate or even improve upon human decision-making – though (at least at this point of technological development) not holistically but only with regard to well-specified, albeit possibly quite complex tasks."

Human cognitive capacity

Mark Nitzberg and John Zysman's definition in "Algorithms, data, and platforms: the diverse challenges of governing AI" is briefer:

"AI is technology that uses advanced computation to perform at human cognitive capacity in some task area."

2. Cross-border consensus

Because the risks of AI are serious, many are calling for international regulation, and efforts to set global regulatory standards for AI are already underway.

This won't be easy.

Competing nations

Many nations and blocs, including the U.S., China, EU, and U.K., see AI as a key driver of national and economic security. Indeed, in 2017, Russian President Vladimir Putin claimed that "whoever becomes the leader in this space [AI] will become the ruler of the world."

In "Shaping AI's Future? China in Global AI Governance," Jing Cheng and Jinghan Zeng highlight how the AI rivalry between the U.S. and China is a barrier to cross-border AI regulation:

"When it comes to AI, both [the] U.S. and China consider each other as the main competitor, if not rival.

"It is particularly difficult for both the U.S. and China, as geopolitical tensions are embedded in almost all aspects of the bilateral relations.

"Their geopolitical competition has often overridden the desire for transnational cooperation."

Equal access

Global AI regulations must consider the requirements of nations and regions that have little or no AI capabilities.

"Most ... existing [AI] strategies have been launched in Europe, North America, and major Asian powerhouses such as China, India, Japan, and South Korea, with very little activity in Africa, Latin America, and large parts of Asia," say Inga Ulnicane et al. in The Global Politics of Artificial Intelligence.

"These uneven developments around the world present limitations and potential challenges with AI policy and governance developments being concentrated in the most developed parts of the world."

National regulations and culture

Global regulations for AI must also take account of existing laws, regulations, and cultural norms across numerous countries.

In "Algorithms, data, and platforms: the diverse challenges of governing AI," Mark Nitzberg and John Zysman say: "The overriding question will be how to create some system of interoperability so that tools and applications can work across boundaries while recognizing and respecting diverging preferences.

"For example, in Germany, property owners have a right to exclude their building façades from Google’s globally accessible 'Street View' system, whereas Americans have no such right."

Laws governing how the data that AI systems rely on is collected and stored also differ from country to country.

Because AI-enabled technology operates across markets, it's also likely to intersect with many other areas of law.

"The development and deployment of AI can be stimulated or curtailed by domains as different as tax law, tort law, privacy and data protection law, IP law, competition law, health law, public procurement law, consumer protection law ... the list goes on," says Nathalie A. Smuha.

3. Liability and responsibility

Unlike humans, AI systems have no rights or responsibilities and aren't accountable for their actions and decisions.

So, who is responsible for the actions of an AI system? What if an AI system creates another AI system?

Regulators need robust answers to these questions.

The rise of autonomous machines ... raises the possibility that they, rather than a human designer, will be responsible for their actions

"Many actors are involved in the lifecycle of an AI system," highlight Gauri Sinha and Rupert Dunbar in Regulating Artificial Intelligence in Industry.

"These include the developer, the deployer (the person who uses an AI-equipped product or service), and potentially others (producer, distributor or importer, service provider, professional, or private user)."

The European Commission's view is that responsibility should lie with those best placed to address potential risks:

"While the developers of AI may be best placed to address risks arising from the development phase, their ability to control risks during the use phase may be more limited. In that case, the deployer should be subject to the relevant obligation."

Another potential solution, which bodies including the European Parliament's Committee on Legal Affairs have examined, is to give AI systems legal status as "electronic persons":

"The most sophisticated autonomous robots could be established as having the status of electronic persons responsible for making good any damage they may cause, and possibly applying electronic personality to cases where robots make autonomous decisions or otherwise interact with third parties independently."

4. The "pacing problem"

We've already highlighted how the rapid pace of innovation is a challenge when defining new technologies.

It's also difficult for regulatory and legal frameworks to evolve as quickly as the technology itself.

This is sometimes known as the "pacing problem."

In "Navigating the governance challenges of disruptive technologies: insights from regulation of autonomous systems in Singapore," Devyani Pande and Araz Taeihagh suggest two ways regulators can deal with this issue:

- Slow the speed of innovation

- Boost the capacity of the legal system to propose timely regulations

Slowing the pace of innovation is unlikely to be practical in most scenarios, given the rivalry between some nations and blocs.

5. Enabling innovation

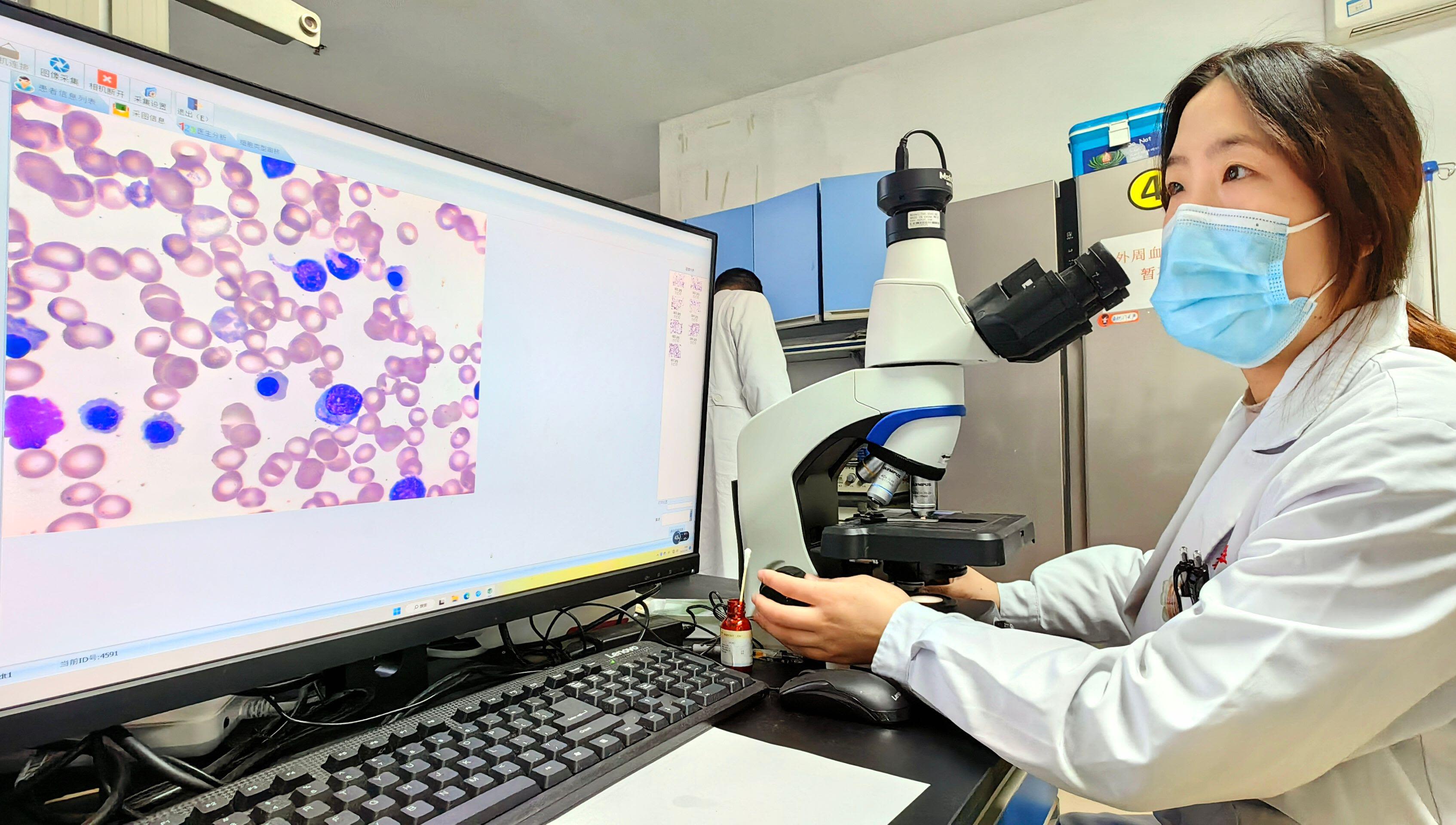

Despite the risks, AI has the potential to provide solutions to some of society's most pressing problems.

In healthcare alone, AI can already help diagnose cancer, predict patient mortality, and develop new drugs.

However, according to Nicolas Petit and Jerome De Cooman in The Routledge Social Science Handbook of AI, "regulation stifles AI innovation."

Therefore, a challenge for regulators is to manage the social and environmental risks of AI while continuing to enable innovation.


Regulators should also explore the impact of AI regulation on innovation in related sectors, says Nathalie A. Smuha:

"By advantaging the development of AI above other technologies, incumbent AI companies may gain an (unfair) market advantage, to the disadvantage of others working on the same goal with a different technology."

Four guiding principles for AI regulation

In "Six human-centered artificial intelligence grand challenges," Ozlem Ozmen Garibay et al. highlight four guiding principles that should inform the regulation of AI:

1. Fairness

E.g., bias-free algorithms, bias audits, inclusivity

2. Integrity

E.g., appropriate use of data, aligning success metrics of AI systems with their desired objectives

3. Resilience

E.g., robust and secure systems

4. Explainability

E.g., transparency of the algorithmic decision-making process, potential to reverse engineer AI decisions and models

Further reading

Journal articles

- Algorithms, data, and platforms: the diverse challenges of governing AI by Mark Nitzberg and John Zysman in the Journal of European Public Policy

- Defining the scope of AI regulations by Jonas Schuett in Law, Innovation and Technology

- Diversity, equity, and inclusion in artificial intelligence: an evaluation of guidelines by Gaelle Cachat-Rosset and Alain Klarsfeld in Applied Artificial Intelligence

- Evaluating Europe's push to enact AI regulations: how will this influence global norms? by Steven Feldstein in Democratization

- From a 'race to AI' to a 'race to AI regulation': regulatory competition for artificial intelligence by Nathalie A. Smuha in Law, Innovation and Technology

- Governing artificial intelligence in China and the European Union: Comparing aims and promoting ethical outcomes by Huw Roberts et al. in The Information Society

- Shaping AI's future? China in global AI governance by Jing Cheng and Jinghan Zeng in the Journal of Contemporary China

- Six human-centered artificial intelligence grand challenges by Ozlem Ozmen Garibay et al. in the International Journal of Human–Computer Interaction

- What is the State of Artificial Intelligence Governance Globally? by James Butcher and Irakli Beridze in The RUSI Journal

Books

- Cybersecurity Ethics: An Introduction by Mary Manjikian

- Hidden in White Sight: How AI Empowers and Deepens Systemic Racism by Calvin Lawrence

- Regulating Artificial Intelligence in Industry edited by Damian M. Bielicki

- Robot Souls: Programming in Humanity by Eve Poole

- The Global Politics of Artificial Intelligence edited by Maurizio Tinnirello

- The Routledge Social Science Handbook of AI edited by Anthony Elliott
